GPU Geometry Map Rendering – Part 2

We left off in part 1 talking about the initial failures with my GPU geometry map shader. I did fail to mention that there was a bright spot the first time I ran the new code – it was amazingly fast. So fast I was able to increase the noise octaves from the 5 that would run reasonably well on the CPU up to 30 and still run at well over 60fps. I have to admit that I spent some of that first 18-hour day just roaming around on a barren, reddish planet. That huge improvement in performance made the pain to come well worth it.

So, at the end of part 1 we set up the C# code for executing the geometry map shader. Now let’s take a look at the shader itself.

float Left;
float Top;
float Width;
float Height;

struct Vertex
{
    float4 Position : Position;
    float2 UV : TexCoord0;
};

struct TransformedVertex
{
    float4 Position : Position;
    float2 UV : TexCoord0;
};

void QuadVertexShader(in Vertex input, out TransformedVertex output)
{
    output.Position = input.Position;
    output.UV = input.UV;
}

float4 QuadPixelShader(in TransformedVertex input) : COLOR0
{
    // grab the texture coordinates - you can use them directly, but doing this
    // lets you see the value in PIX more easily when you're debugging
    float2 uv = input.UV;

    // get the coordinates relative to the input dimensions
    float x = Width * uv.x;
    float y = Height * uv.y;

    // translate the final coordinates
    return float4(Left + x, y - Top, 1, 1);
}

The Left, Top, Width, and Height floats at the top are the parameters used to define the face-space area. These are the values you’re setting when using the XNA Effect class as mentioned in part 1.

quadEffect.Parameters["Left"].SetValue(-1.0f);
quadEffect.Parameters["Top"].SetValue(1.0f);
quadEffect.Parameters["Width"].SetValue(2.0f);
quadEffect.Parameters["Height"].SetValue(2.0f);

The Vertex struct defines the vertices entering the vertex shader, and the TransformedVertex defines the vertices leaving the vertex shader and entering the pixel shader. Since we’re using pre-transformed vertices the vertex shader doesn’t need to do anything but pass the input values through, which it does very nicely.

The pixel shader isn’t actually all that much more complex. The GPU takes the texture coordinates specified in each vertex and interpolates them for us as it’s rasterizing our quad. Let’s think again in just the horizontal dimension. As mentioned previously, we’ve set up the texture coordinates so they start at 0.0 on the left vertex and end at 1.0 on the right vertex. The intent of the shader is to map those values to face-space. In this example the 0.0 should map to -1.0, and the 1.0 should map to +1.0. Also, remember that we’re working with a 5×5 geometry map, and these are the face-space values we expect to get for each of the 5 horizontal positions: -1.0, -0.5, 0.0, 0.5, 1.0.

Walking through the shader for the left pixel we expect to see this:

The uv.x value the shader receives is 0.0
x = Width * uv.x = 2.0 * 0.0 = 0.0
Left + x = -1.0 + 0.0 = -1.0

And for the right pixel we (well, at least I did at one time) expect to see this:

The uv.x value the shader receives is 1.0
x = Width * uv.x = 2.0 * 1.0 = 2.0
Left + x = -1.0 + 2.0 = 1.0

But that isn’t what happens. In fact, the 5 texture coordinates we get are 0.0, 0.2, 0.4, 0.6, 0.8, resulting in face-space values of: -1.0, -0.6, -0.2, +0.2, +0.6. That did not make any sense to me at all – shouldn’t the texture coordinates be interpolated from 0.0 to 1.0? I spent hours sifting through my code to find out what I had set up wrong. I even took the step of creating a separate bare minimum test app, which I really hate to do. Everything I tried gave me the same results, and I was forced to conclude that somehow the GPU must not be interpolating the texture coordinates the way I expected. I suspected that it had something to do with how DirectX maps texels to pixels, but nothing I tried in that regard gave me the results I needed. I finally caved and started up PIX.
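To make the mismatch concrete, here is a minimal C# sketch that mirrors only the horizontal math of the pixel shader (Left + Width * uv.x) and runs it over both sets of texture coordinates. The class and method names are made up for illustration and are not part of the test project.

```csharp
using System;

static class FaceSpaceWalkthrough
{
    // Mirrors the horizontal part of QuadPixelShader: Left + Width * uv.x.
    public static float ToFaceSpaceX(float left, float width, float u)
    {
        return left + width * u;
    }

    static void Main()
    {
        const float left = -1.0f;
        const float width = 2.0f;

        // What I expected the GPU to hand the shader for a 5-pixel row...
        float[] expectedU = { 0.0f, 0.25f, 0.5f, 0.75f, 1.0f };
        // ...and what it actually delivered.
        float[] observedU = { 0.0f, 0.2f, 0.4f, 0.6f, 0.8f };

        for (int i = 0; i < expectedU.Length; i++)
        {
            Console.WriteLine("pixel {0}: expected {1:F2}, observed {2:F2}",
                i,
                ToFaceSpaceX(left, width, expectedU[i]),
                ToFaceSpaceX(left, width, observedU[i]));
        }
    }
}
```

The last pixel is where it hurts most: the row should end at +1.0 but stops at about +0.6.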

It was a bit daunting at first, but there are some simple tutorials out there that will walk you through the basics. I’m going to go through how I ended up using it, in order to easily run the same tests over, and over, and over again. Start by downloading the test project.

Unzip it into your project folder, open the QuadTest solution, rebuild it, and set QuadTestBad as the startup project. Run it, and verify that you get a pretty box with various shades of blue, white, and magenta.

Now start up PIX. Select File/New Experiment. Use the browse button to navigate to the QuadTestBad.exe you just built. Don’t change anything else for now and press the Start Experiment button. The app should start and you should see the pretty box, as well as some PIX overlay information in the upper left. If that’s the case, all is well. If not, you’ll need to figure out why on your own.

Now what we need to do is have PIX grab all the Direct3D data for a single frame. Often you can do this by selecting the “Single-frame capture of Direct3D whenever F12 is pressed” radio button before starting the experiment. That works just fine, and is the method I started out with. But there’s a better way in this case. From the experiment window (the one where you set up the program path), select the More Options button. You should see something like this:

The left tree view might have some different values in it if you changed any options on the initial screen, but the things you need to do here are the same regardless. In the tree view the green T lines are Triggers, and the purple A lines are Actions. When selecting a trigger line you’ll see the right panel which will let you select the type of trigger. Select “Frame” for the trigger type. This will reveal the options for the Frame trigger type. Enter “1” for the frame number. Now select the Action line, which will change the right panel to allow you to select an action. Select the “Set Call Capture” action. Then under Capture Type select “Single-frame capture Direct 3D”, and check the “capture d3dx calls also” box as well.

We’ve just defined a trigger-based action. We’ve told PIX to capture Direct3D data on frame #1. Now let’s create a second trigger-based action. Press the green T button to create the new trigger. Again select Frame for the trigger type, but this time enter 2 for the frame number. For the action select Terminate Program. So, we’ve now told PIX to exit the program on frame #2. To restate, on frame 1 PIX will capture a single frame of data, and then on frame 2 it will exit the program. You can just go the F12 route in many cases, but when you’re going to be repeating something over and over, setting up the triggers can save a lot of time. In some cases you may have to set the triggers, since it can be impossible to hit F12 at just the right moment to capture the data you want.

So, go ahead and start the experiment. You should see the QuadTestBad app start up, and then immediately quit. PIX should then display a screen full of data. For this discussion we’re only interested in the panels on the bottom: Events and Details. Events will show you all of the Direct3D calls, and Details will show you details about those events. There is a lot of noise in the D3D calls, so it can be difficult to find what you’re looking for by just scanning through it. There are some buttons at the top of the Events panel that let you move to the next or previous frame, and the next or previous draw call. So, press the button that has the D with a down arrow:

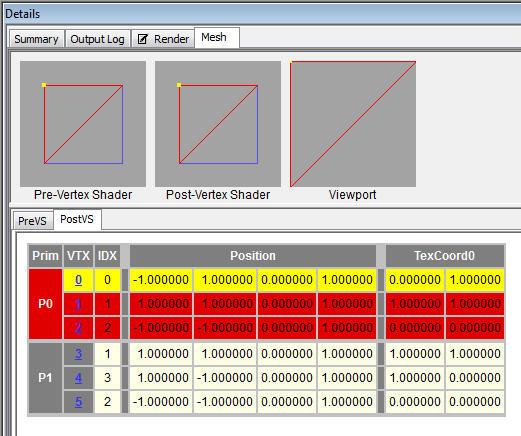

That will take us to the draw call we’re interested in examining. In the Details panel select the Render tab. You should see the quad we drew. This view will let us step through the vertex and pixel shaders for each pixel displayed in the render tab. You can also look at the Mesh tab to see information at the vertex level, both pre-vertex-shader and post-vertex-shader. Go ahead and do that now, and you’ll see that the screen-space positions and texture coordinates we set up for the full-screen quad did indeed arrive at the vertex shader correctly, and they were correctly sent on to the pixel shader.

Let’s debug a pixel. Go back to the Render tab, mouse over the upper left corner of the image until the X and Y values displayed in the status area show 0, 0. You can zoom into the image if necessary using the buttons at the top of the panel. Once you have the mouse over the top left pixel, right click and select “Debug This Pixel” to open up the Debugger tab. This tab shows a history of the pixel for this frame. Scroll down a bit until you see the DrawPrimitive call. There are several links displayed for debugging the vertices, as well as the pixel. Let’s start with one of the vertices just so you can see it. Click the Debug Vertex 0 link to bring up the vertex shader debugger. Here you can step through the vertex shader code (both forward and backward) and examine all of the variables and registers and such that are involved. Press F10 to step through each line. You’ll see variable values added to the list at the bottom as the values change, or look at the Registers tab to see the individual register values. You can also switch to the Disassembly tab to see the assembly code, which contains comments to help match up registers to variable names in the HLSL code.

To get back to the initial debugger screen press the “back” toolbar button – it’s the one with the green circle and white arrow pointing left.

Now let’s do the fun part and debug the pixel shader. Click the Debug Pixel (0, 0) link, which takes you to the pixel shader debugger. You may have noticed that the pixel shader could be made much more efficient by changing things to use vectors instead of individual floats, and using the incoming texture coordinates directly rather than copying them into a local variable. If you noticed this, you would be right. But splitting things out this way makes debugging in PIX a lot easier, at least for me. You’ll notice that the pixel shader debugger doesn’t display the value for input.UV anywhere, and there is also no way to add a “watch” like you would do in Visual Studio. You could look at the Registers tab and get a good idea, but that can involve a lot of thinking and writing, and examining the assembly code to determine which register is mapped to which variable. So, I found that it helped a great deal to break things out like this because the debugger adds everything to the variable list as you make changes.

If you execute the first line, float2 uv = input.UV, you’ll see what I mean. The uv variable is added to the list and shows the current value, which is (0, 1). Now, if you debug all 5 of the top row of pixels you’ll see that the uv.x values for the pixels are 0.0, 0.2, 0.4, 0.6, 0.8. Why doesn’t it ever reach 1.0? Honestly, I still think it has something to do with texel to pixel mapping, and I tried I don’t know how many combinations of shifting things around by half pixels in various coordinate systems, but I still have no idea why it doesn’t make it all the way to 1.0. I’m hoping someone will read this and let me know.

I do know how it’s coming up with those values though. It starts uv.x out at 0, and adds 1.0 / GeometryMapWidth for the next pixel. In our test case GeometryMapWidth is 5, so it’s adding 0.2 each time. If I could make the GPU add 0.25 each time I’d be in business. What I’d like to do is have the GPU add 1.0 / (GeometryMapWidth – 1) each time, but I can’t change the divisor – the GPU is always going to find the step by dividing by GeometryMapWidth. But what if I could change the numerator? Accessing some little-used and rusty algebra skills I came up with this:

n / GeometryMapWidth = 0.25
n = GeometryMapWidth * 0.25

Our GeometryMapWidth is 5, so n = 1.25. But how do we make the GPU use that as the numerator? Well, as it turns out the numerator in the 1.0 / GeometryMapWidth formula isn’t always 1.0. It’s really the width defined by the texture coordinates from the left and right vertices (in the horizontal case we’re considering). So far the rightmost texture value has been 1.0, and the left has been 0.0. So the formula becomes (1.0 – 0.0) / GeometryMapWidth. If the left coordinate is something besides 0, for example 0.13, the formula would look like (1.0 – 0.13) / GeometryMapWidth.

So, using that knowledge, we can change the numerator to whatever we want by manipulating the texture coordinates. Since the left value is 0.0 and needs to stay that way, we can change the right value to 1.25. The GPU will then calculate the step value as (1.25 – 0.00) / GeometryMapWidth, or 1.25 / 5, which is the 0.25 value we’re looking for! So now this is what our full screen quad definition looks like:

float pw = 1.0f / (Width - 1);
float ph = 1.0f / (Height - 1);

vertices = new VertexPositionTexture[4];
vertices[0] = new VertexPositionTexture(
    new Vector3(-1, 1, 0f), new Vector2(0, 1));
vertices[1] = new VertexPositionTexture(
    new Vector3(1, 1, 0f), new Vector2(1 + pw, 1));
vertices[2] = new VertexPositionTexture(
    new Vector3(-1, -1, 0f), new Vector2(0, 0 - ph));
vertices[3] = new VertexPositionTexture(
    new Vector3(1, -1, 0f), new Vector2(1 + pw, 0 - ph));

This generalizes the approach a bit. Instead of hard coding the 0.25 value we can calculate what it needs to be based on the size of the geometry map. We then add that value to 1 to get the final value, for the horizontal dimension. For the vertical dimension we’re actually subtracting it from the bottom coordinate due to the relationship between the different coordinate systems.
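If you’d like to check the arithmetic without firing up PIX, here is a rough C# sketch of the interpolation behaviour described above: start at the left texture coordinate and add (right – left) / GeometryMapWidth for each pixel. This is only a model of what I observed, not a statement of how the hardware works, and the InterpolationModel class and its methods are names I made up for the example.

```csharp
using System;

static class InterpolationModel
{
    // Model from the text: the first pixel gets the left coordinate, and each
    // pixel after that adds (rightU - leftU) / mapWidth.
    public static float[] InterpolateRow(float leftU, float rightU, int mapWidth)
    {
        float step = (rightU - leftU) / mapWidth;
        var row = new float[mapWidth];
        for (int i = 0; i < mapWidth; i++)
        {
            row[i] = leftU + i * step;
        }
        return row;
    }

    // n = GeometryMapWidth * desiredStep, where desiredStep = 1 / (GeometryMapWidth - 1).
    // This works out to the same value as 1 + pw in the quad definition.
    public static float CorrectedRightU(int mapWidth)
    {
        float desiredStep = 1.0f / (mapWidth - 1);
        return mapWidth * desiredStep;
    }

    static void Main()
    {
        const int mapWidth = 5;

        // Original quad: right coordinate of 1.0 never reaches 1.0 in the shader.
        Console.WriteLine(string.Join(", ", InterpolateRow(0.0f, 1.0f, mapWidth)));

        // Adjusted quad: right coordinate of 1.25 lands the last pixel on 1.0.
        float rightU = CorrectedRightU(mapWidth);
        Console.WriteLine(string.Join(", ", InterpolateRow(0.0f, rightU, mapWidth)));
    }
}
```

For the 5-wide map, the first row steps by 0.2 and stops at 0.8, while the second steps by 0.25 and ends on 1.0.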

So, in the sample code, set QuadTestGood as the Startup Project and run it through PIX. Debug each pixel and you’ll see that the texture coordinates are interpolated like we want them to be.
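The vertical direction can be sanity-checked the same way. This sketch assumes the same interpolation model applies top to bottom (first row gets the top vertex’s v, each row after adds (bottom – top) / GeometryMapHeight), and it uses the Top = 1.0 and Height = 2.0 values from earlier. The VerticalInterpolation class is a hypothetical name for the example.

```csharp
using System;

static class VerticalInterpolation
{
    // Same model as the horizontal case, applied from the top row down.
    public static float[] InterpolateColumn(float topV, float bottomV, int mapHeight)
    {
        float step = (bottomV - topV) / mapHeight;
        var column = new float[mapHeight];
        for (int j = 0; j < mapHeight; j++)
        {
            column[j] = topV + j * step;
        }
        return column;
    }

    // Mirrors the vertical part of QuadPixelShader: Height * uv.y - Top.
    public static float ToFaceSpaceY(float top, float height, float v)
    {
        return height * v - top;
    }

    static void Main()
    {
        const int mapHeight = 5;
        float ph = 1.0f / (mapHeight - 1);

        // Top vertices carry v = 1, bottom vertices carry v = 0 - ph.
        float[] column = InterpolateColumn(1.0f, 0.0f - ph, mapHeight);
        foreach (float v in column)
        {
            Console.WriteLine("v = {0:F2}, face-space y = {1:F2}",
                v, ToFaceSpaceY(1.0f, 2.0f, v));
        }
    }
}
```

Under that model v steps from 1.0 down to 0.0 in increments of 0.25, so face-space y runs from +1.0 at the top row to -1.0 at the bottom row.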

One final piece of the puzzle though. When we generate the geometry map we need to generate an extra border of vertices around it for use in calculating the vertex normals. We can do this by simply expanding the texture coordinates in each direction by the value we calculated in the previous step.

// if we have a border then expand the texture
// coordinates out to account for it
if (border)
{
    vertices[0].TextureCoordinate.X -= pw;
    vertices[0].TextureCoordinate.Y += ph;

    vertices[1].TextureCoordinate.X += pw;
    vertices[1].TextureCoordinate.Y += ph;

    vertices[2].TextureCoordinate.X -= pw;
    vertices[2].TextureCoordinate.Y -= ph;

    vertices[3].TextureCoordinate.X += pw;
    vertices[3].TextureCoordinate.Y -= ph;
}

If you want you can run the QuadTestGoodWithBorder project through PIX and verify that it works as well.
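Here is a small C# sketch of the border case in the horizontal direction, using the same interpolation model as before. It rests on one assumption that isn’t spelled out above: that the render target grows by two pixels (one on each side) when the border is enabled, while pw is still computed from the un-bordered width. The BorderCheck class is a made-up name for the example.

```csharp
using System;

static class BorderCheck
{
    // Returns the uv.x value each pixel in a row would receive under the
    // "start at left, add (right - left) / targetWidth" model.
    public static float[] SampleRow(int mapWidth, bool border)
    {
        float pw = 1.0f / (mapWidth - 1);
        float leftU = 0.0f;
        float rightU = 1.0f + pw;
        int targetWidth = mapWidth;

        if (border)
        {
            // Expand the coordinates outward by one step on each side.
            leftU -= pw;
            rightU += pw;
            // Assumption: the render target is two pixels wider with a border.
            targetWidth += 2;
        }

        float step = (rightU - leftU) / targetWidth;
        var row = new float[targetWidth];
        for (int i = 0; i < targetWidth; i++)
        {
            row[i] = leftU + i * step;
        }
        return row;
    }

    static void Main()
    {
        Console.WriteLine(string.Join(", ", SampleRow(5, false)));
        Console.WriteLine(string.Join(", ", SampleRow(5, true)));
    }
}
```

With a 5-wide map the bordered row has 7 samples running from -0.25 to 1.25, so the inner 5 still cover 0.0 to 1.0 and the two extras sit one step outside on either side.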

Now that we have the right texture coordinates, the rest of the shader just scales the coordinate by the face-space width and height to calculate the correct x and y values to pass to the noise functions. The sample shader currently just returns those values as the color. You’d want to change the render target to floating point, and replace this line:

// translate the final coordinates
return float4(Left + x, y - Top, 1, 1);

with this:

return TerrainNoise(float3(Left + x, y - Top, 1));

And create your TerrainNoise function using any of the myriad methods revealed by Google.

So, I guess that’s it. I can’t say I entirely enjoyed this ride, but the destination was worth it. And if anyone wants to explain to me why texture coordinates don’t want to interpolate all the way to 1 on a full screen quad, please feel free. 🙂

4 thoughts on “GPU Geometry Map Rendering – Part 2”

Have you tried using OpenGL, or something which is probably more suitable, like OpenCL?

This would give cross-platform compatibility, and might work a little more predictably.

I’ve never liked OpenGL much, and besides, I wouldn’t be able to create planets on the Xbox :). C# and XNA are a very powerful combination, and suit my needs very nicely.

Great post! Nice!
